In this blog post, we will go over the basic steps to get HP Reverb G2 or Reverb G2 Omnicept Edition headset tracking working in Unity with OpenXR. For this, we will use Unity LTS (2020.3) and the OpenXR package.

Setting up the project packages

The first thing we need to do is set up the project by enabling XR Plug-in Management and OpenXR. To do so, open Project Settings, enable Unity's XR Plug-in Management, and check OpenXR (Unity will install the OpenXR package). Unity may restart to enable the action-based Input System.

Next, download and install the Windows Mixed Reality Feature Tool. This tool allows us to install the Mixed Reality OpenXR plugin, which gives us access to the OpenXR MSFT extensions that we will use later on.

Then we add the HP Reverb G2 Controller Profile to the Interaction Profiles list in the OpenXR settings.
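Before moving on, it can help to confirm that Unity actually initialized an XR loader with these settings. The following is a minimal sketch, not part of the original setup: the class name `XrLoaderCheck` is hypothetical, and it assumes "Initialize XR on Startup" is left enabled in XR Plug-in Management so the loader is ready by the time `Start` runs.

```csharp
using UnityEngine;
using UnityEngine.XR.Management;

// Hypothetical helper (not from the original post): logs which XR loader is active.
public class XrLoaderCheck : MonoBehaviour
{
    private void Start()
    {
        // Instance is null when XR Plug-in Management has no settings for this build target.
        XRGeneralSettings settings = XRGeneralSettings.Instance;
        if (settings == null || settings.Manager == null)
        {
            Debug.LogWarning("XR Plug-in Management is not configured for this build target.");
            return;
        }

        // activeLoader stays null if no loader could be initialized (for example, no headset found).
        XRLoader loader = settings.Manager.activeLoader;
        if (loader == null)
        {
            Debug.LogWarning("No XR loader is active. Check that OpenXR is enabled and the headset is connected.");
            return;
        }

        Debug.Log($"Active XR loader: {loader.name}");
    }
}
```

Attach the script to any GameObject in the scene and check the Console after pressing Play. The logged name should mention OpenXR if the setup above worked.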

Enabling tracking

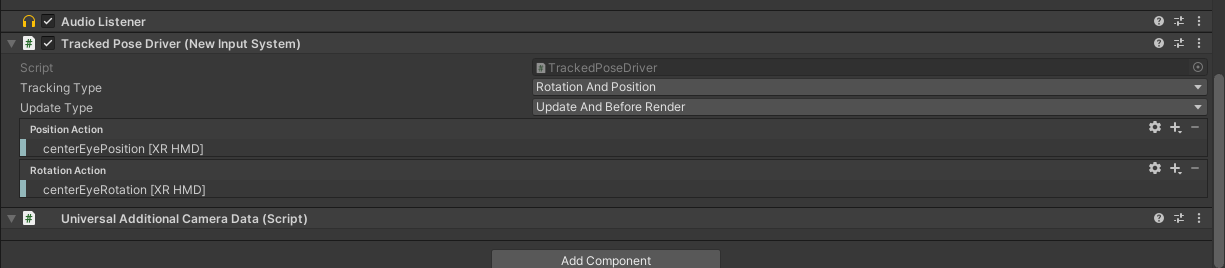

Convert the Main Camera in your scene to an XR Rig. You will notice this adds a Tracked Pose Driver to the Main Camera, where you can reference the actions that provide the camera position and rotation.

Your headset should be tracking now.

Tracking your controllers

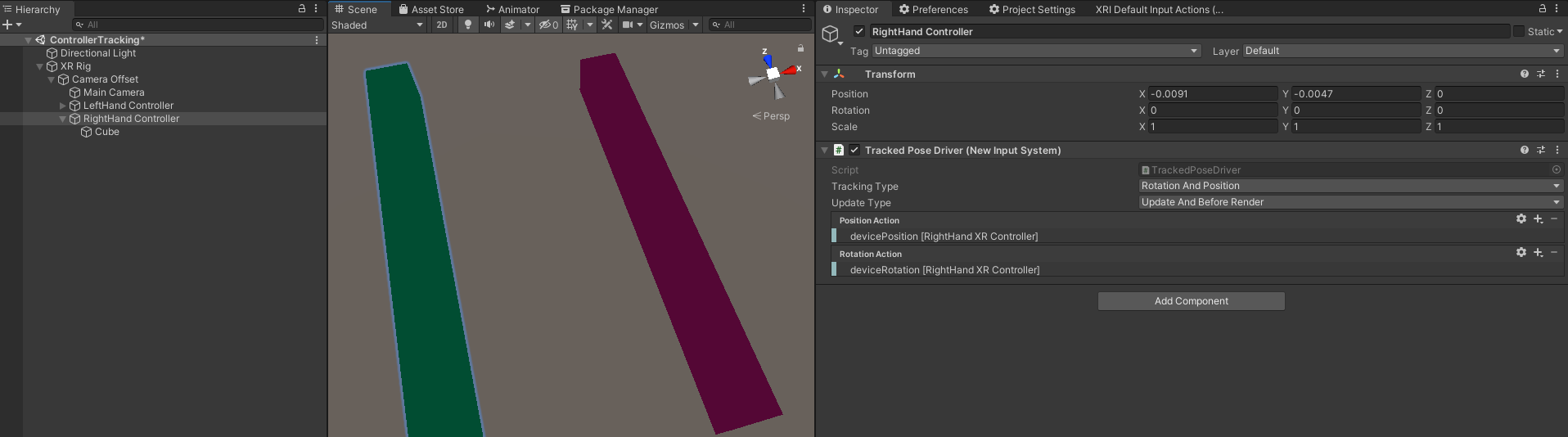

We start by adding controller tracking support. Add a new GameObject under XRRig > Camera Offset and name it RightHand Controller, then add another GameObject named LeftHand Controller. To each of these GameObjects, add the Tracked Pose Driver (New Input) script and set its Position and Rotation inputs to the matching XR Controller (Left or Right) position and rotation actions.

As follows:

- For position, we will use XR Controller->Right/Left->devicePosition

- For rotation, we will use XR Controller->Right/Left->Optional Controls->pointerRotation

Now we will create a cube GameObject as a child of each of the LeftHand and RightHand controllers so we have a test representation of the controllers.

Now, hit Play and watch the cubes follow the controllers as you move them.
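To make it clearer what the Tracked Pose Driver is doing with those two bindings, here is a rough script equivalent. It is only a sketch under assumptions: the class name `ControllerPoseFollower` and the `isLeftHand` field are hypothetical, and the binding paths are the Input System path form of the `devicePosition` and `pointerRotation` controls listed above.

```csharp
using UnityEngine;
using UnityEngine.InputSystem;

// Hypothetical stand-in for the Tracked Pose Driver: copies a controller pose onto this transform.
public class ControllerPoseFollower : MonoBehaviour
{
    [SerializeField] private bool isLeftHand; // assumption: set per controller in the Inspector

    private InputAction positionAction;
    private InputAction rotationAction;

    private void OnEnable()
    {
        // The usage tag ({LeftHand} or {RightHand}) selects which controller the binding resolves to.
        string hand = isLeftHand ? "{LeftHand}" : "{RightHand}";
        positionAction = new InputAction(type: InputActionType.Value,
            binding: $"<XRController>{hand}/devicePosition");
        rotationAction = new InputAction(type: InputActionType.Value,
            binding: $"<XRController>{hand}/pointerRotation");

        // Actions do not read input until they are enabled.
        positionAction.Enable();
        rotationAction.Enable();
    }

    private void OnDisable()
    {
        positionAction.Disable();
        rotationAction.Disable();
    }

    private void Update()
    {
        // Skip the update while no matching controller control is bound (controller off or asleep).
        if (positionAction.controls.Count == 0 || rotationAction.controls.Count == 0)
        {
            return;
        }

        // Poses are relative to the tracking origin, so apply them in the parent's (Camera Offset) space.
        transform.localPosition = positionAction.ReadValue<Vector3>();
        transform.localRotation = rotationAction.ReadValue<Quaternion>();
    }
}
```

In practice the built-in Tracked Pose Driver is the better choice, since it also handles update timing before rendering. The sketch is mainly useful for seeing how the bindings map to a transform.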

[Optional] Input actions

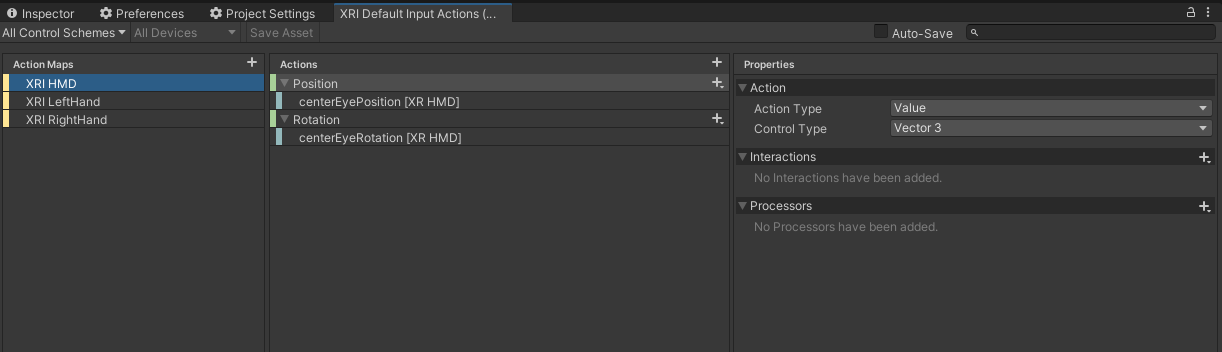

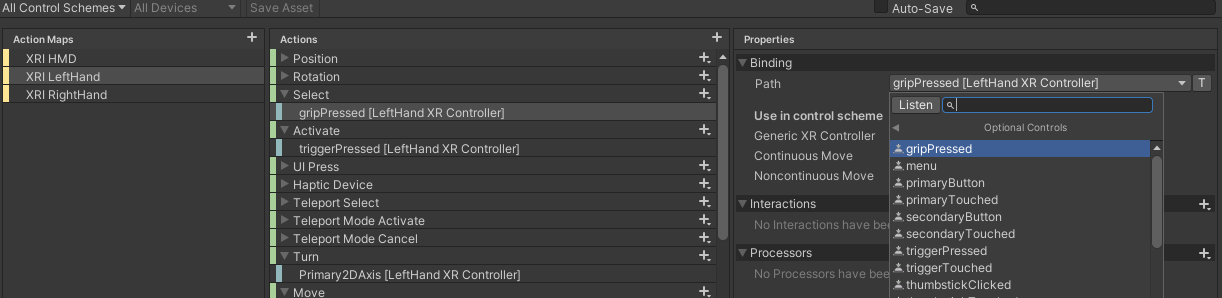

Since we are using the new Input System, we will first create a new Input Action asset (in the Project window: right-click > Create > Input Actions) and then define action maps for the HMD and the controllers. In each action map, we will define position and rotation actions bound to the corresponding control paths, as follows:

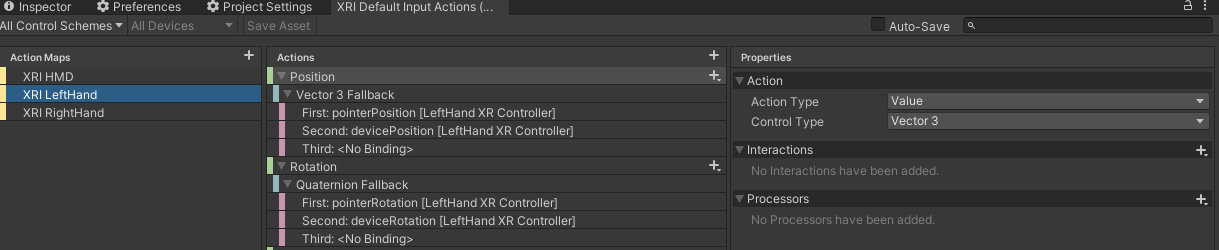

Do the same for the controllers:

We can also include actions for the controller buttons so we can read them to drive interactions in our application. For example, we can create an action and bind it to the controller's primary button.
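The same kind of button action can also be defined in code instead of in the asset editor, which is sometimes handy for quick tests. The sketch below is an illustration only: the class name, the map name "RightController", and the action name "Select" are hypothetical, and it assumes the right-hand controller exposes a `primaryButton` control.

```csharp
using UnityEngine;
using UnityEngine.InputSystem;

// Hypothetical example: builds an action map in code and logs primary button presses.
public class CodeDefinedSelectAction : MonoBehaviour
{
    private InputActionMap controllerMap;
    private InputAction selectAction;

    private void Awake()
    {
        // Equivalent to adding an action map and a Button action in the Input Actions editor.
        controllerMap = new InputActionMap("RightController");
        selectAction = controllerMap.AddAction(
            "Select",
            type: InputActionType.Button,
            binding: "<XRController>{RightHand}/primaryButton");
    }

    private void OnEnable()
    {
        selectAction.performed += OnSelect;
        // Enabling the map enables every action it contains.
        controllerMap.Enable();
    }

    private void OnDisable()
    {
        controllerMap.Disable();
        selectAction.performed -= OnSelect;
    }

    private void OnSelect(InputAction.CallbackContext context)
    {
        // context.control is the physical control that triggered the action.
        Debug.Log($"{context.action.name} pressed on {context.control.path}");
    }
}
```

An Input Action asset is usually easier to maintain for a real project. The code-defined version just shows what the editor sets up behind the scenes.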

Listening to controller buttons

Now that we defined the actions we can use the following code to listen to them:

    // Assumed field: an InputActionReference assigned in the Inspector.
    [SerializeField] private InputActionReference selectAction;

    private void Start()
    {
        // Actions do not fire callbacks until they are enabled.
        selectAction.action.Enable();
        selectAction.action.performed += SelectActivation;
    }

    private void SelectActivation(InputAction.CallbackContext context)
    {
        Debug.Log($"Phase {context.phase}");
    }

Additionally, for VR you may need a ray controller, teleportation, and many other features. For these, we recommend using an interaction toolkit such as Unity's XR Interaction Toolkit or MRTK.

Bonus Track - Using Motion Controller Model

When we started the tutorial, we imported the motion controller model extension. Now we will use it to query the model key and then get the model data for the controller. The data is returned in glTF format, so we will use a glTF loader: https://github.com/atteneder/glTFast.

The code to get and load the model will look similar to:

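    // Requires: using System.Threading.Tasks; using GLTFast; using Microsoft.MixedReality.OpenXR;
    // `handedness` and `controller` are assumed to be fields of the enclosing MonoBehaviour.
    public async void LoadControllers()
    {
        controller = handedness == Handedness.Left ? ControllerModel.Left : ControllerModel.Right;

        // The model key identifies the controller model the runtime currently provides.
        if (controller.TryGetControllerModelKey(out ulong modelKey))
        {
            // Task<byte[]> (not plain Task) so the glTF binary can be read from Result.
            Task<byte[]> controllerModelTask = controller.TryGetControllerModel(modelKey);
            await controllerModelTask;

            if (controllerModelTask.Status == TaskStatus.RanToCompletion)
            {
                Debug.Log("Loaded controller data");

                // Parse the binary glTF and instantiate it as a child of this transform.
                var gltf = new GltfImport();
                bool success = await gltf.LoadGltfBinary(controllerModelTask.Result);
                if (success)
                {
                    success = gltf.InstantiateMainScene(transform);
                }
                else
                {
                    Debug.LogError("Failed to load model");
                }
            }
            else
            {
                Debug.LogError("Failed to load controller model");
            }
        }
        else
        {
            Debug.LogError("Error getting controller model key");
        }
    }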

First we query the controller model key, and then we use it to get an array of bytes that represents the controller model. Finally, we load these bytes with the glTF loader and instantiate the model under the current transform.

Now you should be ready to go with tracking and viewing your controllers in Unity using OpenXR. Let us know if you have any questions!